Has Penguin thrown you off balance?

If your site was hit by Google’s dreaded Penguin update, there is hope, but it’s going to take a little bit of work to get back to your previous rankings.

The first step is to look at your backlink profile to find the spammy links you built that Google has decided to penalize you for. You’ll need to submit a reconsideration request to Google showing that you have cleaned up all the spammy links, and the more detail you share, the happier it makes them. Don’t rush this step; get all your ducks in a row before kneeling before the King.

Google Webmaster Tools is your best friend

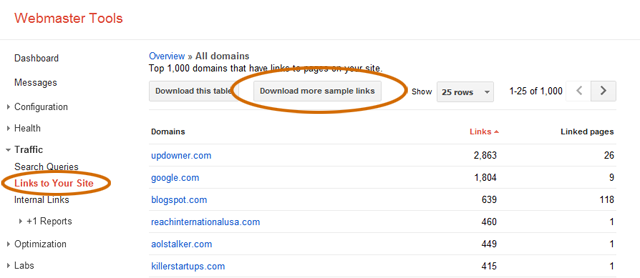

The best way to get started is to log into Google Webmaster Tools (GWT) and download the links Google is showing you there.

The only downside is that if you have a lot of backlinks, GWT may show you only a “sample” of them in the interface, which is why you need to download them.

Google's Webmaster Tools backlink interface

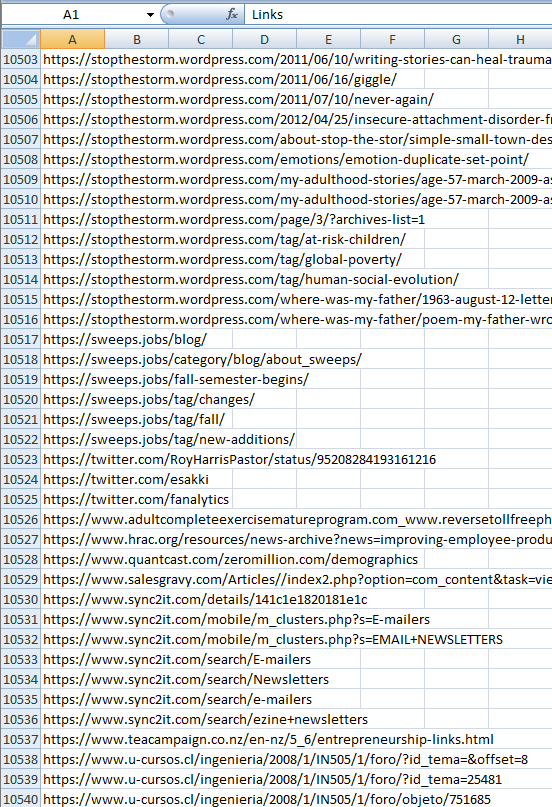

The download will be a CSV file that you can manipulate in your favorite spreadsheet. In my world, that means firing up Excel and taking a look at just how many links Google sees pointing back at my site; in this example, it was 10,540 backlinks. You’ll notice that many come from sites where you have a sitewide link (think of an attribution blurb if you’re a web development company, or a blogroll-type link), while others may simply come from a site that mentions you a lot.
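
If you want to see which domains account for most of your links (sitewide links tend to float to the top), you can also count links per domain in a few lines of Python. This is a minimal sketch and not part of the Excel workflow described here. It assumes the exported URLs have already been loaded into a plain list of strings, and `links_per_domain` is a hypothetical helper name. Note that it treats `www.example.com` and `example.com` as different hosts.

```python
from collections import Counter
from urllib.parse import urlsplit


def links_per_domain(urls):
    """Count how many backlink URLs come from each linking host."""
    counts = Counter()
    for url in urls:
        # .hostname is lower-cased and excludes any port number
        host = urlsplit(url.strip()).hostname
        if host:  # skip blank or malformed rows
            counts[host] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical sample rows standing in for the GWT export
    sample = [
        "http://blog.example.com/2012/05/post-a",
        "http://blog.example.com/about",
        "http://blog.example.com/contact",
        "http://directory.example.org/listing/123",
    ]
    for host, n in links_per_domain(sample).most_common():
        print(f"{n:5d}  {host}")
```

A domain with hundreds of links pointing at you is usually a sitewide placement. Those are worth looking at first.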

Google Webmaster Tools backlink export

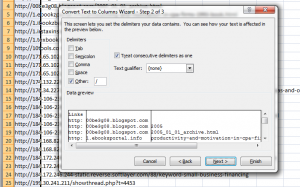

However the links break down, what matters is being able to filter the results so we can look at the domains alone. If you are a spreadsheet ninja, feel free to use a fancier formula to pull out the domains. For my purposes, a very simple filter that splits the URLs on the slash (Excel’s Text to Columns) is enough to isolate the domains.

The nice part about doing it this way is that it lets me move straight on to the next step, with the domains fairly clean in their own column. Once you have finished splitting, you should have at least three columns. The first is the protocol column, which should contain just the “http:” (or “https:”) prefixes. The second column holds the domains. Any additional columns hold the directory paths of the pages linking to you. For the protocol and domain to land in adjacent columns, check “Treat consecutive delimiters as one” in the Text to Columns wizard; otherwise the “//” leaves an empty column between them.
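
If you would rather script this step than use Text to Columns, here is a minimal Python sketch of the same split. It assumes every exported URL starts with a protocol such as `http:` or `https:`. The function name `split_like_text_to_columns` is hypothetical.

```python
def split_like_text_to_columns(url):
    """Split a URL on "/" the way Excel's Text to Columns does when
    "Treat consecutive delimiters as one" is checked.

    Assumes the URL starts with a protocol such as "http:" or "https:".
    """
    # Dropping empty pieces collapses the "//" after the protocol into a
    # single break, so the domain lands in the second "column".
    return [part for part in url.strip().split("/") if part]


if __name__ == "__main__":
    urls = [
        "http://example.com/blog/post",
        "https://shop.example.org/",
    ]
    rows = [split_like_text_to_columns(u) for u in urls]
    protocols = [row[0] for row in rows]  # first column: "http:", "https:"
    domains = [row[1] for row in rows]    # second column: the linking domains
    print(protocols, domains)
    # ['http:', 'https:'] ['example.com', 'shop.example.org']
```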

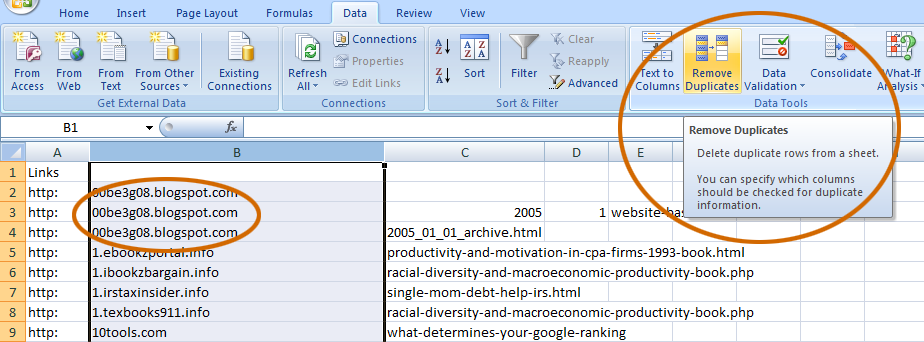

At this point it will be clear that the second column contains duplicate domains, so let’s filter those out with the easiest of all filters: Excel’s “Remove Duplicates”.

Once that has finished, you will be left with the unique domains linking back to your site. In my example, Excel found 7,134 duplicate values and left 3,406 unique values behind. I would say that’s about average for a good-sized site: roughly a 70/30 split. You can recombine the protocol and domains at this stage using the CONCATENATE function.
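
The de-duplication step can be sketched in Python as well. This is a minimal sketch that keeps the first occurrence of each domain, in the spirit of Remove Duplicates. It lower-cases entries first because domain names are case-insensitive. `remove_duplicate_domains` is a hypothetical name. Applied to the figures above, 7,134 duplicates out of 10,540 rows is about 68%, which is the "roughly 70/30" split.

```python
def remove_duplicate_domains(domains):
    """Keep the first occurrence of each domain.

    Returns (unique_domains, duplicates_removed). Blank entries are skipped
    and not counted as duplicates.
    """
    seen = set()
    unique = []
    removed = 0
    for domain in domains:
        key = domain.strip().lower()  # domain names are case-insensitive
        if not key:
            continue
        if key in seen:
            removed += 1
        else:
            seen.add(key)
            unique.append(key)
    return unique, removed


if __name__ == "__main__":
    domains = ["example.com", "Example.com", "blog.example.org", "example.com"]
    unique, removed = remove_duplicate_domains(domains)
    share = removed / (removed + len(unique))
    print(f"{removed} duplicates, {len(unique)} unique ({share:.0%} duplicates)")
    # 2 duplicates, 2 unique (50% duplicates)
```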

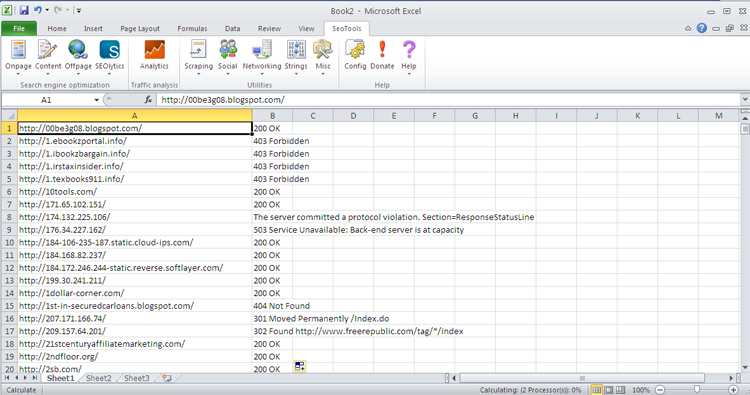

It’s possible that some of the links Google reports are old, so let’s use the excellent SeoTools for Excel add-on to check the current status of each domain. Be patient if you have a lot of links to check; it’s resource-intensive.

At this point, I copied and pasted just the values into a new worksheet for the next step, so the sheet doesn’t keep re-querying the sites over the network. This is where you filter out any site that returns a non-200 response. I move the sites returning 301 and 302 redirects to another tab to review later, but I really want to focus on the 200 (OK) domains first. Of the original 10,000+ links that GWT showed, we’re down to 2,687 domains that are live and resolving where they should.
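
For readers without the SeoTools add-on, here is a rough standard-library Python alternative for checking status codes and bucketing the results. It is a swapped-in technique, not what the post uses. It sends a `HEAD` request and deliberately does not follow redirects, so 301 and 302 responses stay visible. The helper names `fetch_status` and `bucket_by_status` are hypothetical. Some servers reject `HEAD` requests, and those sites would land in the "other" bucket.

```python
import http.client
import urllib.error
import urllib.request


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Refuse to follow redirects so 301/302 codes stay visible."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # urllib then raises HTTPError carrying the 3xx code


_opener = urllib.request.build_opener(_NoRedirect)


def fetch_status(url, timeout=10.0):
    """Return the HTTP status code for url, or None if it could not be reached."""
    try:
        request = urllib.request.Request(url, method="HEAD")
        with _opener.open(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 3xx (not followed), 4xx and 5xx all land here
    except (OSError, ValueError, http.client.HTTPException):
        return None  # DNS failure, timeout, refused connection or malformed URL


def bucket_by_status(statuses):
    """Split a {url: status} map into 200 OK, 301/302 redirects, and the rest."""
    buckets = {"ok": [], "redirect": [], "other": []}
    for url, status in statuses.items():
        if status == 200:
            buckets["ok"].append(url)
        elif status in (301, 302):
            buckets["redirect"].append(url)
        else:
            buckets["other"].append(url)
    return buckets
```

Run `fetch_status` once per domain and save the resulting dictionary, for example with `json.dump`. That way you are not re-querying every site on each pass, which is the same reason the post pastes values into a new worksheet.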

I wish I could tell you there is a web-based tool that checks all your backlinks and alerts you to the suspicious ones, but there isn’t. Instead, start by simply looking at the domain names to see if any look crazy; if you can’t tell from the name alone, go and look at the site.

The final step is to make a list of the sites you need to contact and ask to remove the spammy links. If you can’t find a way to contact the site owner on the site itself, try checking the WHOIS record for the technical contact. Be aware that many webmasters now ask to be paid to remove links.

Oh, and if any of this seems overwhelming, you can always contact us and we’ll take care of it for you 🙂

Hey Phil, thanks for the writeup. Penguin… I’m working on a case now, and hands down the most difficult part is identifying the poor links (that decision process) and then, in some cases, getting in touch with someone to remove them. I think it’s worth pointing out that part of the identification process is looking for sites with a lot of advertisements (in-text pop-up ads, many blocks of Google AdSense). Another signal is a site that links to a large number of your pages, or one that is a broad general directory or a link-building directory. A third is awkward placement of anchor text within an article. Again, thanks for the writeup.

I agree, Joshua: manually looking at the backlinks is a pain, but it’s worth it. Some will obviously jump out at you, but others may take a little more exploration to ferret out what the problem is.

As for getting contact information for the sites you want links removed from, that is far and away the most difficult part of the process.

Update: the disavow tool saved the day for a client of mine who had some extra-horrible links that were extremely difficult to get removed. That tool was a lifesaver, no matter how much I wish it weren’t necessary.
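
When removal requests go unanswered, the leftover domains end up in the file you upload to Google’s Disavow Tool. That file is plain text with one URL or `domain:` entry per line, and `#` starts a comment line. Here is a minimal Python sketch that builds one from a list of domains or URLs. The helper name `build_disavow_file` and the sample entries are hypothetical.

```python
from urllib.parse import urlsplit


def build_disavow_file(domains, note=None):
    """Return disavow-file text: an optional '#' comment line, then one
    'domain:' line per unique domain, sorted alphabetically."""
    cleaned = set()
    for entry in domains:
        entry = entry.strip().lower()
        if "://" in entry:  # accept full URLs by keeping only the host
            entry = urlsplit(entry).hostname or ""
        if entry:
            cleaned.add(entry)
    lines = [f"# {note}"] if note else []
    lines += [f"domain:{d}" for d in sorted(cleaned)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = build_disavow_file(
        ["http://spam.example.com/page", "links.example.net"],
        note="Owners contacted, links not removed",
    )
    print(text, end="")
```

Using `domain:` entries disavows every link from a site, which suits sitewide links. Individual URLs can be listed instead when only one page is the problem.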

Awesome post!

Hey, what filter do you use to separate out the domain?

Thanks

Sorry, Eacsoft, I should have been clearer there. If you click the image, you can see it a little better. It’s the Text to Columns feature, which asks you how you want to split the text. I choose to split on the “/”, which separates the http:, the domain and the /file path. The only catch is that I need to recombine them a couple of steps later.

Will CONCATENATE put the slashes in for me too? I’m struggling here. Thanks for your help so far, though.

Cheers!

CONCATENATE lets you string together as many strings as you want. You pull in the protocol (http:), then manually add the double slash (//), and finally add the domain, so it ends up being a three-part concatenation.
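
In Excel, assuming the protocol is in column A and the domain in column B (hypothetical cell positions), the three-part formula would be `=CONCATENATE(A2,"//",B2)`, or equivalently `=A2&"//"&B2`. The same idea in Python is sketched below with a hypothetical `recombine` helper:

```python
def recombine(protocol, domain):
    """Three-part concatenation: protocol ("http:") + "//" + domain."""
    return "".join([protocol, "//", domain])


if __name__ == "__main__":
    rows = [("http:", "example.com"), ("https:", "shop.example.org")]
    print([recombine(p, d) for p, d in rows])
    # ['http://example.com', 'https://shop.example.org']
```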

Hi Phil,

This is really useful information for fixing unnatural links, and you have explained it very well. Since April 2012, lots of websites have received manual or algorithmic penalties from Google in the Penguin updates. They have probably all taken some steps, but I am not sure that all, or even 50%, of those websites have escaped their manual or algorithmic penalties. Your informative blog post may help them recover.

Special thanks to Google for its latest tool, the Disavow Tool.